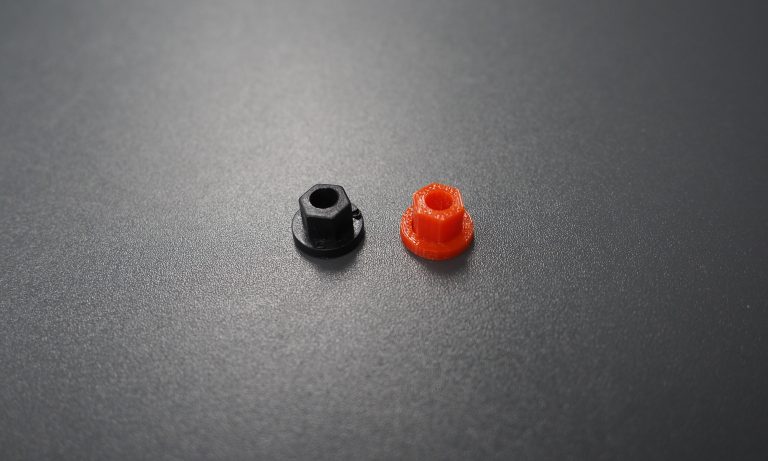

3D printing has been gaining popularity in recent years, and for a few years now, Richmond Public Library has had ‘MakerBot Replicator 2’ stations available for patrons to create their own objects. This week, I had the opportunity to use the 3D printers for the first time, to print a replacement part for a projector screen. Backstory: Sometime in March, one of these plastic caps popped off the projector screen we use for youth ministry at St. Francis Xavier Parish, and disappeared. It turns out that this small part (about 1 cm in diameter) is a critical component Continue Reading

Computers & Technology

Overriding Routing for VPNs on macOS

I have a Virtual Private Network (VPN) set up so that I can connect to my home network and use things such as my Synology file server when I’m not at home. This works most of the time, as long as the IP address range of the local network (e.g., a Wi-Fi hotspot) doesn’t conflict with my home network’s range (10.x.y.0/24). However, I have come across some Wi-Fi hotspots that use a 10.0.0.0/8 subnet. When I try to access my home resources, the traffic is then sent over the route through the hotspot network instead of going through the VPN.
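The conflict described above can be checked with Python's standard `ipaddress` module. This is only a minimal sketch: the home network `10.1.2.0/24` is a made-up stand-in for the elided `10.x.y.0/24`, the helper name `networks_conflict` is hypothetical, and the hotspot is assumed to use the whole `10.0.0.0/8` range.

```python
import ipaddress


def networks_conflict(local_cidr: str, home_cidr: str) -> bool:
    """Return True if the local network's range overlaps the home network's range."""
    local_net = ipaddress.ip_network(local_cidr)
    home_net = ipaddress.ip_network(home_cidr)
    return local_net.overlaps(home_net)


# Hypothetical home network standing in for 10.x.y.0/24.
HOME = "10.1.2.0/24"

# A hotspot on 10.0.0.0/8 contains every 10.x.y.0/24 network, so it conflicts.
print(networks_conflict("10.0.0.0/8", HOME))

# A hotspot on a typical 192.168.1.0/24 network does not overlap the home range.
print(networks_conflict("192.168.1.0/24", HOME))
```

A check like this only detects the overlap; it does not change any routes on the machine.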

Suspending Browser Tabs for Memory Conservation

Update: As of February 2021, The Great Suspender is no longer available because its releases were taken over by a malicious owner. Modern Chrome versions are said to have better memory management anyway, so you may find a separate extension unnecessary.

If you’re like me and have upwards of 30 to 50 browser tabs open at the same time, you may notice that your computer becomes sluggish. In my case, this was because all the tabs still consume memory even though I might not need them for some period of time. I still like to keep some tabs that I might need Continue Reading

Synology Hyper Backup Options and Pricing

The Synology Hyper Backup app allows owners of Synology NAS devices to easily set up backups to various cloud services. However, one thing that isn’t shown in the app is the pricing of each service. So here’s a pricing comparison (prices as of Aug 4, 2018).

Synology C2

- Location: Frankfurt, Germany
- 100 GB = €9.99/year (approx. USD $0.0100/GB/month)
- 300 GB = €24.99/year (approx. USD $0.0081/GB/month)
- 1 TB = €59.99/year (approx. USD $0.0058/GB/month)
- 1+ TB = €69.99/TB/year (approx. USD $0.0068/GB/month)

Amazon S3

- Location: Many, using U.S. (N. Virginia) to compare
- Pricing: Complicated – only comparing standard S3 storage costs here USD Continue Reading
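The approximate per-gigabyte figures for Synology C2 come from dividing a yearly euro price by twelve months and by the plan's capacity, then converting to U.S. dollars. Here is a rough sketch of that arithmetic. The post does not state the exchange rate it used, so the `1.16` below is purely an assumed value, as is treating 1 TB as 1000 GB; the function name is hypothetical, and the results may differ slightly from the rounded figures listed.

```python
def usd_per_gb_month(eur_per_year: float, capacity_gb: float, usd_per_eur: float) -> float:
    """Convert a yearly plan price in EUR into an approximate USD cost per GB per month."""
    eur_per_month = eur_per_year / 12
    eur_per_gb_month = eur_per_month / capacity_gb
    return eur_per_gb_month * usd_per_eur


# Assumed exchange rate (not from the post); adjust to the rate on the comparison date.
ASSUMED_USD_PER_EUR = 1.16

# Synology C2 plans from the post: (capacity in GB, price in EUR per year).
# 1 TB is treated as 1000 GB here, which is also an assumption.
plans = [(100, 9.99), (300, 24.99), (1000, 59.99)]

for capacity_gb, eur_per_year in plans:
    cost = usd_per_gb_month(eur_per_year, capacity_gb, ASSUMED_USD_PER_EUR)
    print(f"{capacity_gb} GB: approx. USD ${cost:.4f}/GB/month")
```

Normalizing every plan to a cost per gigabyte per month makes yearly flat-rate plans comparable with services that bill by usage.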

Desktop Computer Upgrade

I last posted about my computer specs six years ago when I first built my VMware ESXi Whitebox server. Here’s an update on what happened to it: From the software point of view, all was well for the first 2-3 years. I had FreeNAS, Windows 7 and Windows 8 virtual machines running on it, and some lesser-used Ubuntu virtual machines for playing around. With the IOMMU capabilities of the motherboard, I was even able to make the GPU accessible to the Windows virtual machines, so I could use one as a desktop and even play some games on it. Continue Reading